AGI is Not Possible

An opinion piece by a data scientist and humanist.

As AI has grown in popularity, so have lies and misinformation about it. CEOs of multi-trillion-dollar companies have routinely spread false claims, which reporters have lazily repeated as fact. These lies have spread through every level of mass media, creating a thoroughly warped perception of AI. It’s a mess.

In this article I’ll offer my opinion, based on my experience as a lead developer, co-founder, and director at various AI-related companies. We’ll discuss one of the most lied-about topics in AI: AGI, or “Artificial General Intelligence”.

Will AI be smarter than you any time soon? Spoiler: probably not.

What AI is and isn’t

Because AI is a powerful yet complex technology, it is often treated as a buzzword rather than understood. As a result, the term “AI” has been attached to just about every technology under the sun. I’ve seen AI used in reference to blockchain, Web 3.0, and a litany of other, completely unrelated tech trends. As AI gets confused with technology in general, many non-technical people have naturally begun to see AI as technology itself. I think this trend plays a big part in why so many people are willing to believe AI is far more impactful than it actually is.

Just because someone says a technology uses AI doesn’t mean it actually does. And even if a technology does use AI, haphazardly including it does not automatically make the product better. If duct tape suddenly became a trendy technology, startups would start slapping duct tape on their keyboards just so they could say “made with duct tape”, and large corporations would start putting little pieces of duct tape on existing products so they could say “now made with duct tape”. That doesn’t make duct tape any less useful, but it does make it difficult to discern duct tape’s actual utility.

AI is a tool. It is not a godhead; it is not a deity. In a lot of ways, AI is actually the software equivalent of duct tape.

You can make pretty much anything out of duct tape if you really want to, but because it only holds things together loosely, it tends to fall apart when you try to build big things with it. Because it’s good at so many things, duct tape has found its way into aviation, race cars, home improvement stores, and, yes, ducts. Its name comes from its use on HVAC ducting, although the tape itself was first developed for military use during World War II.

Just like duct tape, there are some things AI is perfect for, some things AI is surprisingly good at, and some things AI just can’t do.

What AI is Good At

AI has become so popular because it allows computers to do things that, previously, only humans could do. AI can look at a picture and understand what’s in it. AI can answer questions about text. AI can generate beautiful images and video, and there are even some cool tools coming out for generating fairly high-quality music. AI can do all of this nearly instantaneously, with results that are often impressively good.

That’s pretty impressive and useful. I use Midjourney to generate the art for my articles. I use ChatGPT and Claude at work to help me analyze human-written text. I’m getting into making music, and I’m using Suno to help me workshop ideas. The list of things AI is good at is growing at an incredible rate, and many people are adding AI to their bag of tricks to get higher-quality work done faster.

OK, so if AI can do all these complicated human things, and it can do more and more of them, isn’t it reasonable to think it will keep getting better? If it’s already better than humans at a bunch of things, when will it be better than humans at everything?

AI is Dumb

In the last section I listed a variety of complex tasks AI excels at, and now I’m calling it dumb. What gives?

To understand why AI is dumb, you have to understand a bit about how AI works. Instead of describing AI directly, I think it’s easier to explain how it works by comparison with something everyone understands: light.

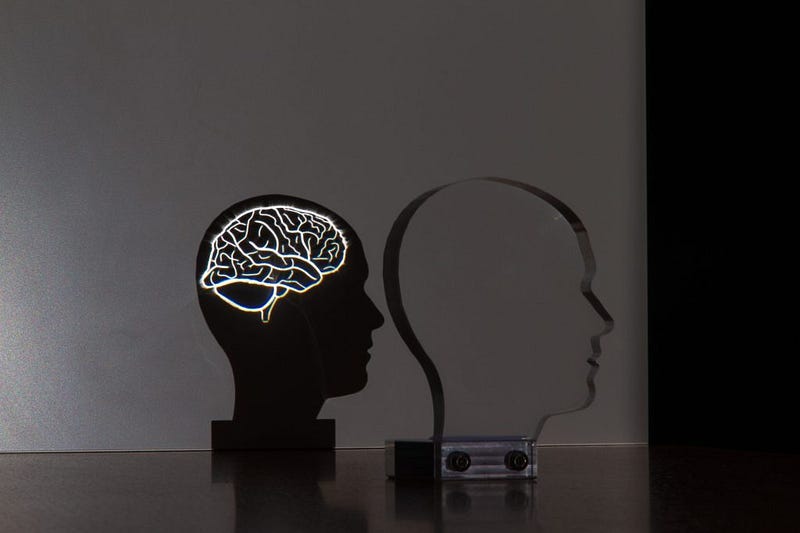

You might be familiar with this. When light passes through a curved transparent surface, it can create beautiful patterns called caustics.

EPFL, a polytechnic university in Switzerland, has figured out how to manufacture glass so that the caustics it casts form a chosen image, turning this phenomenon into art.

This is pretty cool stuff; I don’t know if I could draw a brain as well as that piece of glass did. But is the piece of glass smart? No. The glass is dumb; the people who made the glass are smart. Just because the glass produced a beautiful image doesn’t mean the glass itself is smart.

AI is like multiple pieces of fancy glass, filtering some input to achieve some output. You change what goes into AI, and the output changes. Except, instead of light, it’s things like words and images.

All the cutting-edge AI systems you use are filters that turn your input into some output.
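Here is a minimal Python sketch of that filter idea. It assumes a tiny two-layer neural network whose weights (`W1`, `B1`, `W2`, `B2`) are made-up numbers. They stand in for a trained model and are not taken from any real system. Once training is over, the weights stay fixed, like the shape of the glass. So in this sketch the model is just a function: the same input always produces the same output, and a different input produces a different one.

```python
import math

# Hypothetical, hand-picked weights standing in for a trained model.
# After training they never change, like the shape of the glass.
W1 = [[0.5, -0.2], [0.3, 0.8]]  # first layer: 2 inputs -> 2 hidden units
B1 = [0.1, -0.1]
W2 = [1.2, -0.7]                # second layer: 2 hidden units -> 1 output
B2 = 0.05


def relu(x: float) -> float:
    """Pass positive values through, clip negative values to zero."""
    return max(0.0, x)


def model(inputs: list[float]) -> float:
    """Filter an input through two fixed 'panes' and return a score in (0, 1)."""
    hidden = [
        relu(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(W1, B1)
    ]
    logit = sum(w * h for w, h in zip(W2, hidden)) + B2
    return 1 / (1 + math.exp(-logit))  # sigmoid squashes the score into (0, 1)


print(model([1.0, 2.0]))  # same input, same output, every time
print(model([2.0, 1.0]))  # change the input and the output changes
```

Real systems use millions or billions of such weights, and chat products often add random sampling on top. The basic shape is still the same: fixed numbers transforming an input into an output.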

I’ve described this idea to a few people, and I’ve often gotten responses like “Well, isn’t that all people do? They respond to stimuli; they react to the world around them. Maybe that glass is just as smart as a person.”

Do Not Anthropomorphize Glass

Humans are incredibly complex organisms that have evolved over billions of years and adapted to a vast array of environments. Using our brains, senses, and dexterous bodies, we rose to the top of a highly competitive food chain where life and death were at stake. We used that position to secure food consistently and, over millions of years, put the excess calories toward evolving the most complex computational system the world has ever seen: the human brain.

We are born with a complex brain that continues to develop over the course of our lives, constantly changing based on the way we interact with the world, with others, and with ourselves. As we develop, we seek new challenges to strengthen our minds and new ideas to test the limits of what’s possible. Some of us find mates and create new humans, who inherit a mixture of information from both parents, carrying the continuum of humanity into future eons.

To train AI, you import PyTorch, load some example images labeled as containing cats or dogs, then adjust the parameters within the model until it gets the answer right most of the time.
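The training loop described above can be sketched in a few lines. To keep the sketch self-contained, it uses plain Python instead of PyTorch, and a logistic-regression model instead of an image network. Each "image" is reduced to two hypothetical features, and the `DATA` list is invented for illustration. The core idea is the same: predict, measure the error against the label, and nudge the parameters to shrink that error.

```python
import math
import random

# Hypothetical toy data: each "image" is reduced to two made-up features
# (say, ear pointiness and snout length). Label 1 = cat, 0 = dog.
DATA = [
    ([0.9, 0.2], 1), ([0.8, 0.3], 1), ([0.7, 0.1], 1), ([0.95, 0.25], 1),
    ([0.2, 0.9], 0), ([0.3, 0.8], 0), ([0.1, 0.7], 0), ([0.25, 0.85], 0),
]


def predict(weights, bias, features):
    """Probability that the example is a cat (logistic regression)."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 / (1 + math.exp(-z))


def train(data, epochs=500, lr=0.5, seed=0):
    """Stochastic gradient descent on the log-loss."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.1, 0.1) for _ in range(len(data[0][0]))]
    bias = 0.0
    for _ in range(epochs):
        for features, label in data:
            # For log-loss, the gradient w.r.t. the logit is (prediction - label).
            error = predict(weights, bias, features) - label
            # Nudge each parameter a little in the direction that reduces the error.
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias


def accuracy(weights, bias, data):
    """Fraction of examples classified correctly at a 0.5 threshold."""
    correct = sum((predict(weights, bias, f) >= 0.5) == bool(y) for f, y in data)
    return correct / len(data)


weights, bias = train(DATA)
print(f"training accuracy: {accuracy(weights, bias, DATA):.0%}")
```

In PyTorch the same loop would typically let `loss.backward()` compute the gradients and an optimizer such as `torch.optim.SGD` apply the updates, rather than writing the update rule by hand.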

If you know anything about AI, and you know anything about people, the claim that any modern AI system is even remotely as impressive as a human being is laughable. The only reason AI gets compared to humans is that it’s designed to do what humans can do, and it’s loosely inspired by the way the human brain works. That does not mean AI is anywhere close to the staggering complexity of what humans are capable of.

You might respond at this point: “OK but AI doesn’t just say if an image contains a cat or a dog, it can make art, write poetry, all sorts of crazy stuff. Surely that requires intelligence.”

AI Doesn’t Make Anything

AI copies.

When ChatGPT answers your question, it is essentially asking: “Based on all of the things I’ve seen humans say, what is a reasonable response to the prompt I’ve been given?”

In some ways, you can think of real human examples as being baked into the AI model, which uses a bit of this example and a bit of that example to generate an output.
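Here is a deliberately crude illustration of "a bit of this example, and a bit of that example": a bigram text generator in Python. This is not how ChatGPT works internally, since ChatGPT is a large neural network rather than a lookup table of word pairs. It is a hypothetical toy that can only emit word pairs it has seen in its tiny, made-up `CORPUS`.

```python
from collections import Counter, defaultdict

# Hypothetical miniature "training set" of human-written text.
CORPUS = """the cat sat on the mat .
the dog sat on the rug .
the cat chased the dog ."""


def build_bigrams(text):
    """Count, for every word, which words followed it in the examples."""
    followers = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def generate(followers, start, max_words=8):
    """Greedily extend `start` with the most frequently seen next word."""
    output = [start]
    while len(output) < max_words and followers[output[-1]]:
        next_word, _ = followers[output[-1]].most_common(1)[0]
        output.append(next_word)
        if next_word == ".":
            break
    return " ".join(output)


followers = build_bigrams(CORPUS)
print(generate(followers, "the"))
```

With this corpus, greedy selection loops and prints `the cat sat on the cat sat on`. Every pair of adjacent words appeared in the examples, yet the sentence as a whole goes nowhere.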

When people ask AI a question, they are rightfully impressed by the response. What’s silly is that many people stop there and assume the AI is smarter than them. They assume the AI “thought” of the answer. The more accurate picture is that the AI recalled the answer from a complex combination of human examples.

If you dig a little deeper and ask a few more questions, with the understanding that AI recalls more than it reasons, you’ll find that AI is surprisingly dumb.

These are systems that need to be carefully tuned by humans to produce high-quality output. Making an AI model requires massive computers, massive amounts of high-quality reference data to learn from, and massive amounts of time. And the models still get stupid things wrong.

Hey, humans get things wrong too, but AI gets them wrong consistently. AI needs humans to figure out why the model is making mistakes, then humans need to tweak the model to get a better output. Sometimes it works, and sometimes it doesn't.

The CEOs of big AI companies know this very well, but they want to keep the hype up to keep the money flowing. Many of the researchers who actually build these models don’t share that goal, and they aren’t happy to see their work used to prop up liars.

Conclusion

AI is nowhere near as complex as humans. The only reason AI can do things humans can do is that it’s designed to mimic people. Even with a lot of money and manpower, it’s still hard to make AI models that aren’t stupid and completely useless.

That doesn’t mean AI isn’t powerful. Like duct tape, it has its own array of applications. But just because duct tape is useful doesn’t mean it’s intelligent.

Could AI be “generally intelligent” in the future? Maybe, or maybe we’ll develop duct tape that’s generally intelligent first.

Follow For More!

I describe papers and concepts in the ML space, with an emphasis on practical and intuitive explanations.


Attribution: All of the images in this document were created by Daniel Warfield, unless a source is otherwise provided. You can use any images in this post for your own non-commercial purposes, so long as you reference this article, https://danielwarfield.dev, or both.

Here's an article with an opposing viewpoint, arguing that AGI can emerge from ordered patterns in complex adaptive systems:

# The Emergence of AGI: A Complex Systems Perspective

While some argue that Artificial General Intelligence (AGI) is impossible, a deeper look at complex adaptive systems suggests otherwise. This article proposes that the most crucial aspects of intelligence in AGI can emerge from ordered patterns, challenging the notion that AGI is unattainable.

## The Nature of Complex Adaptive Systems

Complex adaptive systems (CAS) are characterized by their ability to learn, adapt, and evolve. Examples include ecosystems, economies, and the human brain. These systems exhibit emergent properties: behaviors and capabilities that arise from the interactions of simpler components, yet cannot be predicted or explained by studying those components in isolation.
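Conway's Game of Life is a standard, well-studied example of emergence. Each cell follows one local rule based on its eight neighbors. Even so, the five-cell "glider" below travels diagonally across the grid, although no rule mentions movement. The Python sketch checks that after four generations the glider reappears shifted one cell in each direction. It illustrates emergence in general and is not a model of how AI systems work.

```python
from collections import Counter


def step(live: set[tuple[int, int]]) -> set[tuple[int, int]]:
    """Apply Conway's Game of Life rules once to a set of live cells."""
    # Count live neighbors for every cell adjacent to at least one live cell.
    neighbor_counts = Counter(
        (x + dx, y + dy)
        for x, y in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # A cell is alive next step if it has exactly 3 live neighbors,
    # or exactly 2 and is already alive. Every other cell is dead.
    return {
        cell
        for cell, count in neighbor_counts.items()
        if count == 3 or (count == 2 and cell in live)
    }


# A "glider": five cells whose pattern travels diagonally,
# although no rule says anything about movement.
glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}

state = glider
for _ in range(4):
    state = step(state)

shifted = {(x + 1, y + 1) for x, y in glider}
print(state == shifted)
```

The "movement" exists only at the level of the pattern. No individual cell moves; cells simply switch on and off according to their neighbors.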

## AI as a Complex Adaptive System

Current AI systems, particularly large language models and neural networks, already display characteristics of complex adaptive systems. They learn from vast amounts of data, adapt to new inputs, and exhibit behaviors that were not explicitly programmed.

## The Potential for Emergence in AI

The key to understanding the potential for AGI lies in the concept of emergence. Just as consciousness is widely thought to emerge from the complex interactions of neurons in the human brain, general intelligence could emerge from the intricate patterns and interactions within advanced AI systems.

1. **Hierarchical Complexity**: As AI systems become more sophisticated, with multiple layers of abstraction and processing, they create the potential for higher-order emergent properties.

2. **Self-Organization**: Advanced AI systems could develop the ability to reorganize their internal structures, optimizing for general problem-solving rather than specific tasks.

3. **Adaptive Learning**: Through exposure to diverse datasets and problem-solving scenarios, AI could develop generalized learning capabilities that transcend individual domains.

## Beyond Simple Pattern Matching

Critics often argue that AI merely performs sophisticated pattern matching. However, this view oversimplifies the complex processes occurring within advanced AI systems. The interactions between different components of an AI system can lead to novel behaviors and capabilities that go beyond simple recombination of existing patterns.

## The Role of Scale and Complexity

As AI systems grow in scale and complexity, they approach the level of intricacy seen in biological brains. This increased complexity creates more opportunities for emergent properties, potentially including general intelligence.

## Synergy with Human Intelligence

Rather than viewing AGI as a replacement for human intelligence, we should consider the potential for synergistic relationships between human and artificial intelligence. This collaboration could lead to emergent properties at an even higher level, creating intelligence that surpasses both human and machine capabilities individually.

## Ethical Considerations and Responsible Development

As we explore the potential for AGI to emerge from complex systems, it's crucial to consider the ethical implications and ensure responsible development. This includes:

1. Transparency in AI development processes

2. Robust safety measures and fail-safes

3. Ongoing research into the nature of intelligence and consciousness

4. Inclusive discussions about the societal impact of AGI

## Conclusion

While the path to AGI is not straightforward, the principles of complex adaptive systems and emergence provide a compelling framework for understanding how general intelligence could arise from ordered patterns within AI systems. By embracing this perspective, we open new avenues for research and development in the field of artificial intelligence.

As we continue to push the boundaries of AI capabilities, we may find that AGI is not only possible but an inevitable outcome of the increasing complexity and adaptability of our artificial systems. The key lies in fostering the right conditions for emergence and being prepared for the profound implications of this technological leap.