Model Context Protocol — Intuitively and Exhaustively Explained

Plug AI Into Everything

In this article, we’ll discuss “Model Context Protocol” (MCP), a new standard allowing AI to talk to external systems. MCP has exploded in popularity because of the common sentiment that it allows AI to plug into the internet, similarly to how humans connect with external systems using web browsers.

In researching MCP I’ve heard it described as the “HTTP of AI”, “The future of AI”, and all manner of other very bullish claims. These claims seem to be corroborated by the massive growth of the project.

In this article, we’ll cut through the hype and dig into MCP ourselves. We’ll explore what MCP is, what technologies inspired its creation, how it works, and what functionality it allows in building AI systems. We’ll also discuss what it’s not, what it might be, and what some people are trying to make it.

It’s a new protocol in a frothy space, and I have a few opinions about it.

I don’t think Anthropic expected to step right next to a goldmine. — From the discussion section of this article

Who is this useful for? Anyone who wants to form a complete understanding of the state of the art of AI

How advanced is this post? This article touches on many concepts but does so from a high level. It should be accessible to readers of all levels.

Pre-requisites: None

A Special Thanks to Eve and Jerry, two of my friends in the IAEE discord. I’m a humble data scientist, so wrapping my head around MCP was difficult. This article would have taken way longer without their help and feedback. Thanks so much!

The Idea of Model Context Protocol

This all kicked off with Anthropic (the cutting-edge AI company behind the “Claude” family of models) releasing Model Context Protocol in late November of 2024.

In the announcement, they refer to Model Context Protocol (MCP) as

a new standard for connecting AI assistants to the systems where data lives … It provides a universal, open standard for connecting AI systems with data sources, replacing fragmented integrations with a single protocol. The result is a simpler, more reliable way to give AI systems access to the data they need. — Source

and, in the introduction section of the documentation for MCP they state that

MCP is an open protocol that standardizes how applications provide context to LLMs. Think of MCP like a USB-C port for AI applications. Just as USB-C provides a standardized way to connect your devices to various peripherals and accessories, MCP provides a standardized way to connect AI models to different data sources and tools. — Source

So, the point of MCP is to connect “AI applications” to “data sources and tools”. Before we dive into MCP, let’s review some common approaches to building AI applications, so we can get an idea of why “data sources and tools” are useful in the first place.

Retrieval Augmented Generation and Agents

A few years ago, the idea of working with an AI model was simple. You give it a question and get an answer.

This was great, but as developers started integrating AI into products, a serious constraint of AI emerged; sophisticated models might know a lot about history, science, math, and coding, but they don’t know about your company or the codebase you’re working on right now. This severely restricted the practical utility of AI systems.

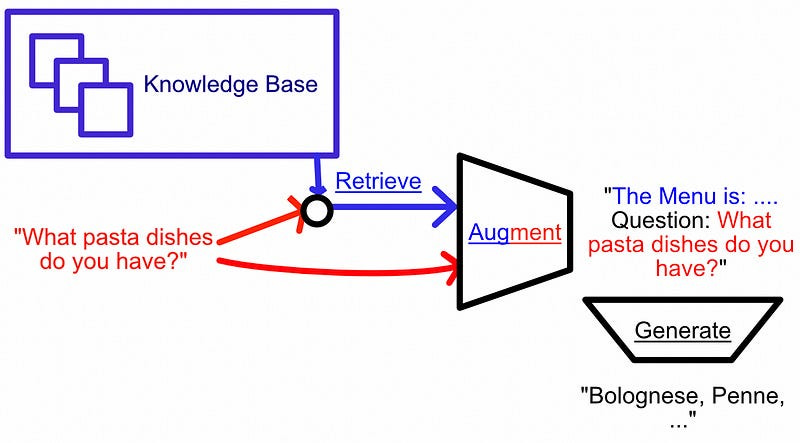

A breakthrough in this regard was “Retrieval Augmented Generation” (RAG). The essential idea of RAG is to use the user's question to find relevant information, and then to stick that information in the prompt that gets sent to the model. This effectively injects contextual information into the language model so that it can answer questions about information it was never trained on.

This idea of injecting information into a language model via RAG is fundamentally useful and is worthy of discussion in isolation. I have a few articles on RAG right now, and will likely be writing more in the near future.

But, for our purposes, that’s the gist of RAG.
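To make the idea concrete, here is a toy sketch of that retrieve-then-prompt step. The documents, the word-overlap scoring, and the helper names (`tokenize`, `retrieve`, `build_prompt`) are all illustrative assumptions; a real system would typically use embeddings and a vector store, and would send the resulting prompt to an actual model.

```python
# A toy sketch of RAG's "retrieve, then stuff the prompt" step.
# Everything here (documents, scoring, helper names) is hypothetical.

DOCUMENTS = [
    "Our refund policy allows returns within 30 days of purchase.",
    "The billing service is written in Go and lives in the payments repo.",
    "Office hours are 9am to 5pm, Monday through Friday.",
]

def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of bare words."""
    return {word.strip(".,?!").lower() for word in text.split()}

def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(question: str, context: list[str]) -> str:
    """Combine retrieved context and the question into a single prompt."""
    context_block = "\n".join(f"- {doc}" for doc in context)
    return f"Use this context to answer.\n{context_block}\n\nQuestion: {question}"

question = "What language is the billing service written in?"
prompt = build_prompt(question, retrieve(question, DOCUMENTS))
print(prompt)  # this string is what would be sent to the LLM
```

The model never sees the other two documents; only the best match is injected, which is the whole trick.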

Agents, in some ways, can be seen as an extension of RAG. Where RAG obeys a logical loop that’s something like this:

RAG:

1) Get question from user
2) Search for information about that question
3) Combine information with question into a single prompt
4) Send prompt to LLM
5) Respond to user with LLM response
6) Go back to step 1

Agents have a logical loop that’s more like this:

Agent:

1) Get question from user
2) Get list of "tools" the agent can execute
3) Create a prompt that combines the question and a description of the tools the agent can use. Tell the LLM to choose tools to execute.
4) Send that prompt to an LLM
5) Execute the tools the LLM chose, and collect the result
6) Construct another prompt that has the result of the tools. Tell the LLM to respond to the user based on the result of the tools.
7) Send that prompt to an LLM
8) Respond to user with LLM response
9) Go back to step 1

So, instead of always searching for information the same way every time a user asks a query, with Agents you can define some set of “tools” that the agent may or may not decide to employ.

A “tool” can be conceptualized as a function. You might have a tool that gets the weather forecast, a tool that can look up the time, a tool that can search the internet, and a tool that can calculate math functions. Tools are general-purpose pieces of code that an LLM can choose to employ, and an “Agent” is really just a logical loop that can tell an LLM what tools it has access to, execute the tools the LLM says it wants to be executed, and feed the results of tool execution to an LLM. There are a variety of ways to go about making agents; I have a few articles on the subject.
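As a rough sketch of that loop, the snippet below registers two plain functions as tools and runs one pass of choose, execute, report. The `fake_llm_choose_tool` function is a hypothetical stand-in for the model: a real agent would send the tool descriptions to an LLM and parse the tool call it returns.

```python
# A minimal sketch of the agent loop, with a stand-in for the LLM.
# All names here are hypothetical and exist only for illustration.
from datetime import datetime, timezone

def get_time() -> str:
    """Return the current UTC time as an ISO-8601 string."""
    return datetime.now(timezone.utc).isoformat()

def add(a: float, b: float) -> float:
    """Add two numbers."""
    return a + b

# The "list of tools" the agent advertises to the model.
TOOLS = {"get_time": get_time, "add": add}

def describe_tools() -> str:
    """Render tool names and docstrings as text that could go in a prompt."""
    return "\n".join(f"{name}: {fn.__doc__}" for name, fn in TOOLS.items())

def fake_llm_choose_tool(question: str, tool_descriptions: str) -> dict:
    """Pretend to be an LLM: pick a tool and arguments for the question."""
    if "time" in question.lower():
        return {"tool": "get_time", "args": {}}
    return {"tool": "add", "args": {"a": 2, "b": 3}}

def run_agent_step(question: str) -> str:
    """One pass of the loop: choose a tool, execute it, report the result."""
    choice = fake_llm_choose_tool(question, describe_tools())
    result = TOOLS[choice["tool"]](**choice["args"])
    # A real agent would now send `result` back to the LLM for a final answer.
    return f"{choice['tool']} returned {result}"

print(run_agent_step("What is 2 plus 3?"))
```

The important part is the shape: tools are just named functions with descriptions, and the loop is ordinary code wrapped around whatever the model decides.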

We could talk about RAG and agents until the cows come home, but the punchline is this:

AI models benefit from having access to information

AI models can benefit from having access to tools they can use

MCP is an effort to standardize how this information is exposed to language models.

If this is all you knew, you would probably think MCP goes about this in a pretty weird way. To fully understand MCP I think it’s useful to understand the company that’s making it.

Anthropic

Anthropic is one of the big AI companies in the space, right up there with OpenAI and Google. This is largely because of competition. Google is doing its own thing, Microsoft is backing OpenAI, and Amazon is backing Anthropic.

As AI continues to emerge as a defining technology, these big players have been investing a lot of money to carve out a spot in the space. So far OpenAI has maintained a dominant position in the market, with (arguably) the best models and the most popular chat experience.

While Anthropic has been competitive with OpenAI in terms of model performance for a while now, part of their strategy has been to shift to integration rather than pure model performance. On October 31st, 2024, Anthropic released “Claude Desktop”, an application that allows you to run Claude from an application on your computer (though, the actual models themselves still live in the cloud).

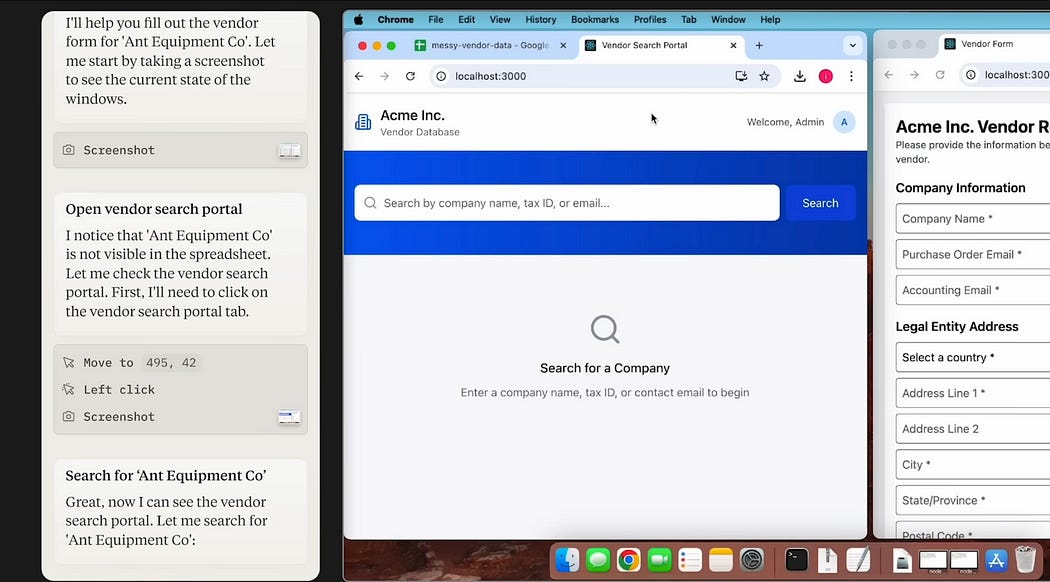

At first glance this isn’t super interesting; it’s just like the online chat experience. But a few days before releasing Claude Desktop, the Anthropic team announced the very exciting “Claude Computer Use” demo.

The idea is that Claude can see images of your computer and choose to do certain actions based on your prompts, like clicking certain buttons or typing things into your keyboard, effectively allowing Claude to automate certain actions that a human might do.

The Claude computer use demo caught a ton of public interest. The only issue is that it’s slow and expensive. It works by taking screenshots of the screen and having the AI model (a big, sophisticated, and expensive model) make individual decisions like moving the mouse and clicking.

So, here we have an AI company with a tremendous amount of backing that has been actively working to differentiate itself through integrating with people's desktop environments, but they’re currently facing cost issues in terms of how AI systems integrate with people's desktop applications. Wouldn’t it be nice if there was some widely adopted system that could be used to make AI models integrate with applications more reliably and economically?

Model Context Protocol — Allowing AI Models to Integrate With Applications More Reliably and Economically

How convenient.

Depending on who you are and what you care about, you might find MCP to be intuitive or confusing. I found it to be fairly confusing, and I found a lot of the rhetoric online to be out of touch with what MCP is chiefly designed to address. This is MCP in a nutshell:

MCP is an approach to connecting AI models to applications with code, rather than needing to feed the AI model screenshots and hope it clicks the right spot. Imagine if you wanted to make an AI agent send an email on your behalf. With “Claude Computer Use” (the demo we discussed previously) the model would need to:

1) open up the email client
2) click a button to compose an email
3) click the recipient field
4) type in the recipient
5) click the subject field
6) type in the subject
7) click the email body field
8) type in the body of the email
9) press send

With MCP, the model might send an email by sending a command like this to an MCP server:

// a conceptual example of what an MCP message might look like
// it's actually different than this, but the point is we can
// send an email reliably in a single pass of the LLM.
{
    "action": "send_email",
    "recipient": "hire@danielwarfield.dev",
    "subject": "Wow I love your articles",
    "body": "Hey I love your articles and I just subscribed to IAEE. Also you're super cool and humble. https://iaee.substack.com/"
}

The real, fundamental idea of MCP is to allow developers to expose the functionality of their applications so Claude Desktop can hook into those applications and make computer use significantly more economical. To facilitate rapid adoption, Anthropic open-sourced the protocol to encourage the development of this type of functionality.
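For the curious, MCP messages are framed as JSON-RPC 2.0, and tool invocations use a `tools/call` method. Below is a rough sketch of how the conceptual message above might map onto that shape. The `send_email` tool and its argument names are hypothetical; they would be defined by whichever server exposes such a tool, and `make_tool_call` is just a helper name I made up.

```python
import json

def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 request in the shape MCP uses for tool calls."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)

# `send_email` and its fields are made up for illustration.
request = make_tool_call(
    1,
    "send_email",
    {
        "recipient": "hire@danielwarfield.dev",
        "subject": "Wow I love your articles",
        "body": "Hey I love your articles and I just subscribed to IAEE.",
    },
)
print(request)
```

Either way, the point stands: one structured message replaces nine fragile clicks.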

In designing MCP, Anthropic took a tip from “language server protocol” (LSP). I found this to be the most confusing part of understanding MCP, and once I understood it the fundamental ideas of MCP made a lot more sense. So, I’d like to discuss it in depth before we dive into MCP itself.

Model Context Protocol’s Inspiration: Language Server Protocol

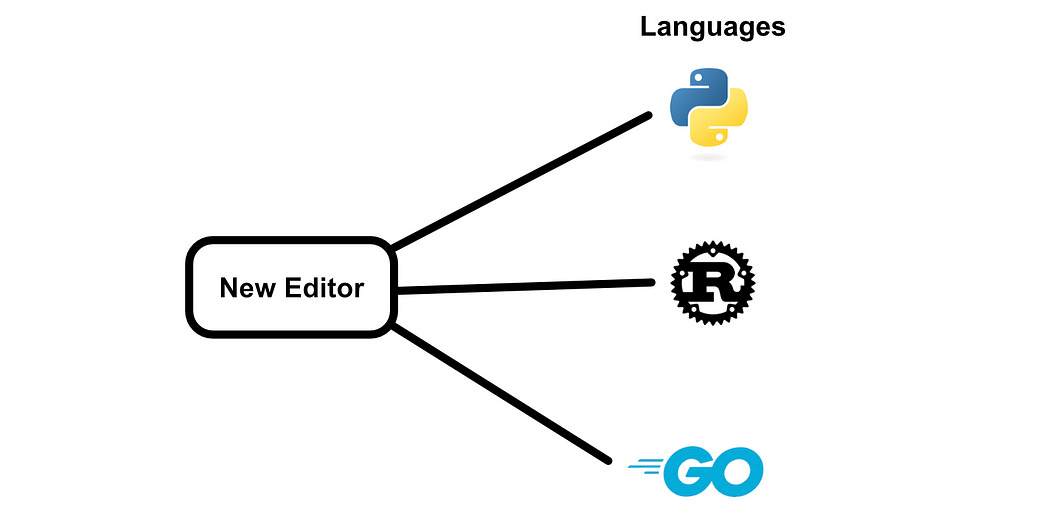

So, the core idea of MCP is to make connecting AI-powered applications to other applications easier. Interestingly, people developing coding languages, and the code editors that interface with those languages, have spent a lot of time grappling with a very similar problem. That’s where “Language Server Protocol” (LSP) comes in.

There are a bunch of coding languages and a bunch of different code editors that different people like to use.

Before LSPs, if you were making an editor you needed to create a custom plugin for every language you wanted to connect to.

Also, if you were releasing a new language, you’d probably want to develop a plugin so that the language is supported by some of the most popular code editors.

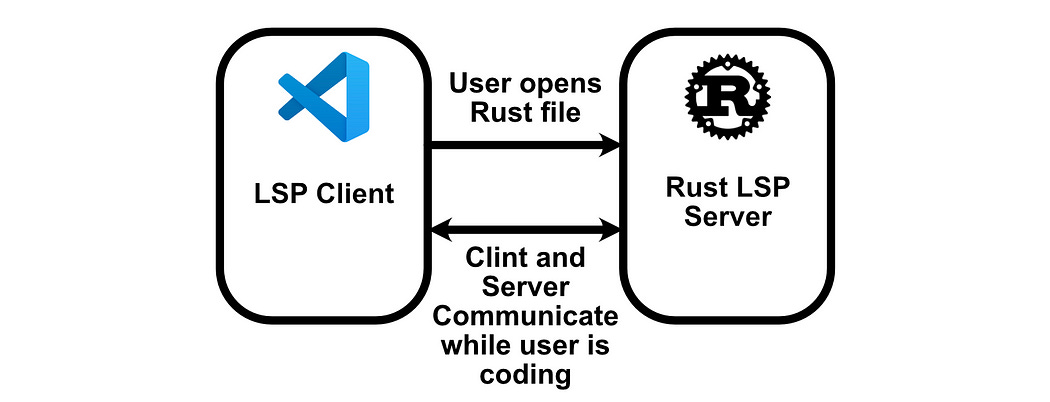

This is a lot of work, and LSP drastically simplified this problem. Essentially, when you’re using LSP there’s a server in between your code editor and the language itself. This server has fixed definitions, meaning if a coding language obeys LSP conventions it can hook up to any LSP editor, and if an editor obeys LSP conventions it can hook up to any LSP-supported coding language.

In LSP we call the code editor a client, and the process that communicates on behalf of the coding language is a server (obeying the client/server verbiage common in many networking applications). Usually, the editor itself spawns the server. When you open a Rust project or file, your editor recognizes that and launches the Rust LSP so you can communicate with that language.

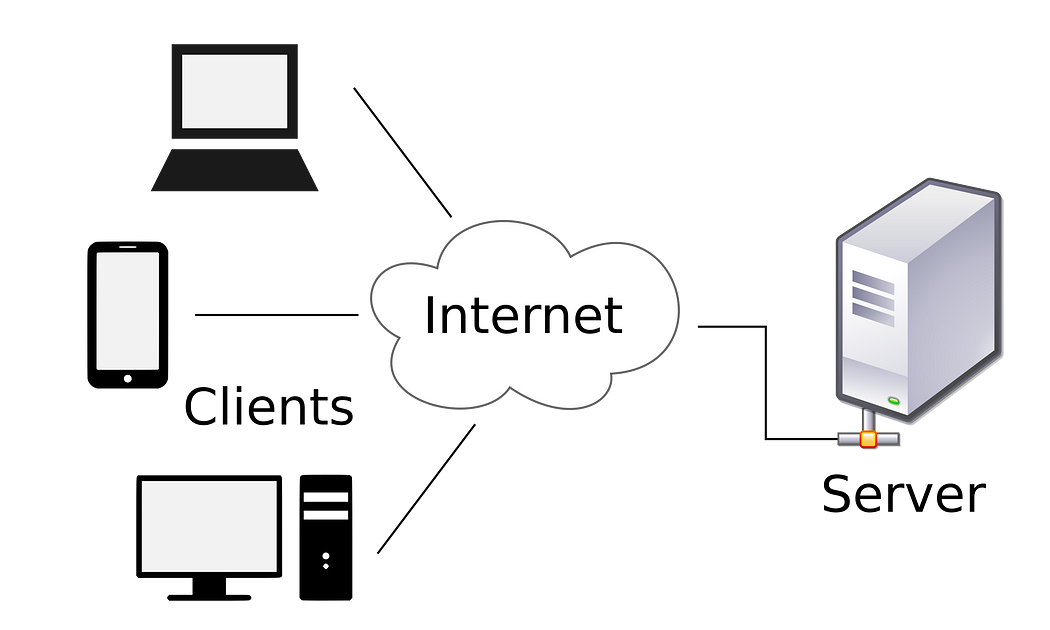

This makes a ton of sense, but it can be kind of counterintuitive if you’re not familiar with LSPs. Normally, developers think of servers from a networking perspective.

With LSPs, the client creates the server, unlike in many applications where the server and client are completely separate entities.
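Here is a small sketch of that "client spawns the server" pattern using only the Python standard library. The child process is a stand-in for a language server, not a real one: it reads a single line from stdin and writes a reply to stdout. `CHILD_CODE` and `ask_child` are hypothetical names for illustration.

```python
# The parent ("client") launches the child ("server") itself and talks
# to it over the child's stdin and stdout.
import subprocess
import sys

CHILD_CODE = "import sys; line = sys.stdin.readline(); print('server got: ' + line.strip())"

def ask_child(message: str) -> str:
    """Launch the child 'server', send it one line, and return its reply."""
    completed = subprocess.run(
        [sys.executable, "-c", CHILD_CODE],
        input=message + "\n",
        capture_output=True,
        text=True,
        check=True,
    )
    return completed.stdout.strip()

print(ask_child("hello"))  # the client owns the server's whole lifecycle
```

There is no port, no URL, and no network involved. The "server" only exists because the client started it.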

The fundamental goal of Model Context Protocol (MCP) is to allow Claude Desktop to integrate with many applications, kind of like the goal of LSPs is to allow code editors to talk with many languages. Thus, the fundamental approach of MCP is similar to LSP.

In MCP, the typical approach is to define a server and then give it to the client, then the client runs the server and talks with it. The “MCP Server” has code that can execute tools, access resources, and communicate to an “MCP Client” such that the client can access those tools and resources. One important detail, though: MCP does this in a way which is specifically designed for LLMs to understand. While the MCP client is not an AI model itself, the assumption is that the MCP client involves LLMs which will need to control how the MCP client communicates with the MCP server.

Alright, let’s get into it.

Using MCP To Build a Simple Client and Server

Here we’ll be using the MCP Python SDK to create a simple client and a server that exposes simple tools that the client can access. We won't be adding an LLM to the equation yet; we’ll just be focusing on how MCP fundamentally works.

Full code for this example can be found here.

First of all, to set up an environment, I’m using the uv environment and package manager, which is recommended by Anthropic when building and running MCP clients and servers. You can use uv init . to initialize the directory you’re in as a uv project, then install MCP with uv add "mcp[cli]".

We’re then going to create two Python files, one for the server and one for the client. Here’s the implementation for the server:

"""A very simple MCP server, which exposes a single very simple tool
"""
from mcp.server.fastmcp import FastMCP

mcp = FastMCP("server")

@mcp.tool()
def say_hello(name: str) -> str:
    """Constructs a greeting from a name"""
    return f"hello {name}, from the server!"

We’re creating an MCP server called server, and that server has a single tool called say_hello. This function simply puts a name inside of a formatted string and returns the response.

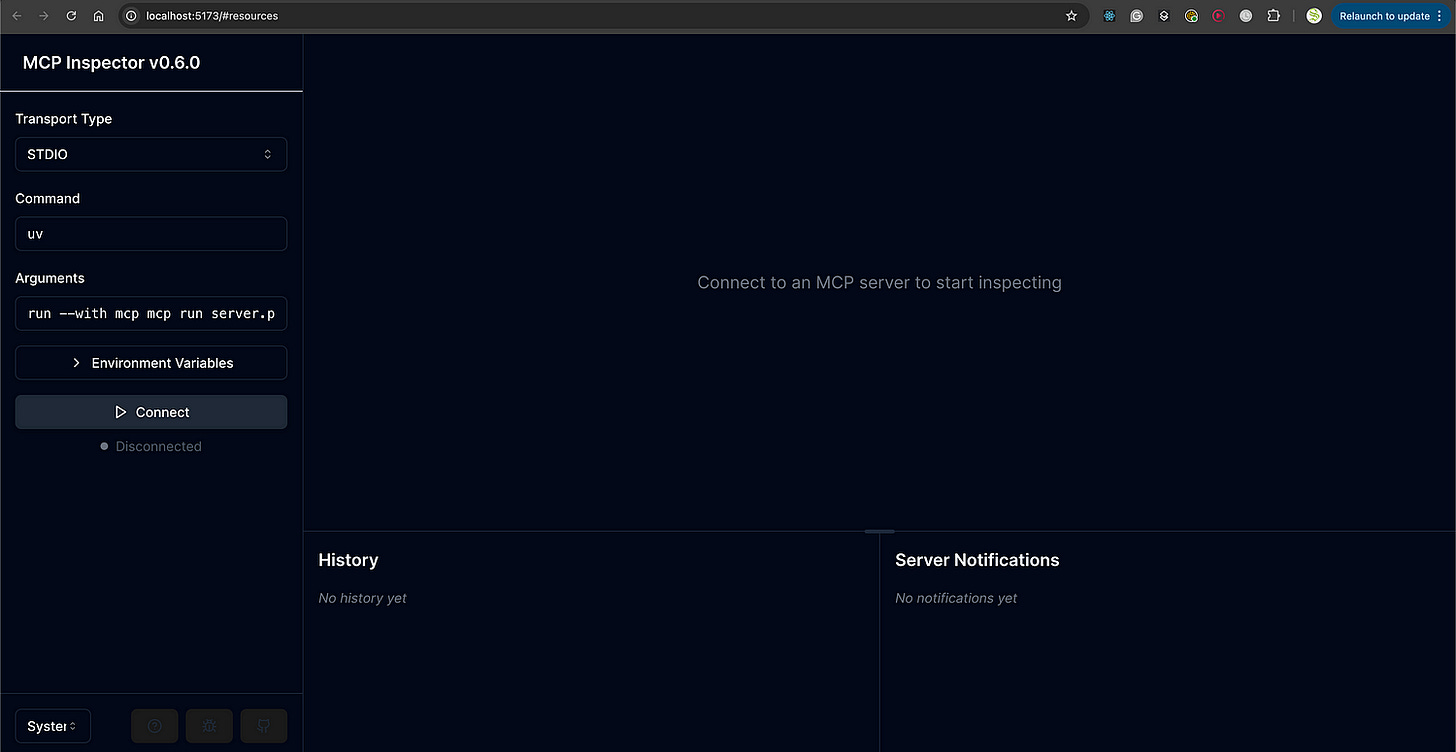

Before we build the client, we can test out this server ourselves with the following command:

uv run mcp dev server.py

Here we’re running server.py in the dev mode of mcp, and we’re using uv run to use the installation of mcp[cli] in our uv environment. This results in the following output:

Starting MCP inspector...

Proxy server listening on port 3000

🔍 MCP Inspector is up and running at http://localhost:5173 🚀

If we click that link, we’ll be greeted with the inspector.

The inspector is a handy little utility that allows you to experiment with your MCP server. We can connect to it by pressing the “connect” button.

Thus we can see the major pieces of the MCP protocol: Resources, Prompts, Tools, Ping, Sampling, and Roots. We’ll be covering all those in a bit. For this example, we can go over to “Tools” and then select “list_tools”.

With that, our say_hello tool will appear. We can click that tool, give it a sample input, and get a response.

So, that’s our super simple server up and running. Let’s make a client that can use it! I’m defining this in a file called client.py:

"""A simple client which starts up our simple server, connects to it
and lists out all the tools on the server.
"""
from mcp import ClientSession, StdioServerParameters, types
from mcp.client.stdio import stdio_client

# Create server parameters for stdio connection
server_params = StdioServerParameters(
    command="mcp",  # Executable
    args=["run", "server.py"],
)

async def run():
    async with stdio_client(server_params) as (read, write):
        async with ClientSession(
            read, write
        ) as session:
            # Initialize the connection
            await session.initialize()

            # List available tools
            response = await session.list_tools()

            # printing out tools
            print(response.tools)

if __name__ == "__main__":
    import asyncio

    asyncio.run(run())

Here we’re defining the server as server_params, which describes where the code for the server is and how it should be run. Then, within the run function, we’re launching the server with stdio_client, connecting to it with session.initialize, and communicating with that server to get a list of all of the tools it has.

We can launch the client, which in turn launches the server, with uv run client.py ; resulting in the following output:

[Tool(name='say_hello', description='Constructs a greeting from a name', inputSchema={'properties': {'name': {'title': 'Name', 'type': 'string'}}, 'required': ['name'], 'title': 'say_helloArguments', 'type': 'object'})]

As you can see, we get a list of tools consisting of one Tool object which represents the tool we defined in our server! We can execute that tool from the client with the following modifications:

"""Modifying the client to execute the tool on the server
"""
from mcp import ClientSession, StdioServerParameters, types
from mcp.client.stdio import stdio_client

# Create server parameters for stdio connection
server_params = StdioServerParameters(
    command="mcp",  # Executable
    args=["run", "server.py"],
)

async def run():
    async with stdio_client(server_params) as (read, write):
        async with ClientSession(
            read, write
        ) as session:
            # Initialize the connection
            await session.initialize()

            # List available tools
            response = await session.list_tools()

            # getting tool name
            tool_name = response.tools[0].name  # 'say_hello'

            # defining tool argument
            tool_args = {'name': 'Daniel'}

            # calling tool
            response = await session.call_tool(tool_name, tool_args)

            # printing response
            print(response.content)

if __name__ == "__main__":
    import asyncio

    asyncio.run(run())

Running uv run client.py results in the following:

[TextContent(type='text', text='hello Daniel, from the server!', annotations=None)]

Thus we launched an MCP client, which in turn launched an MCP server, which both worked together to format my name into a greeting!

Obviously, that’s pretty simplistic; the whole idea of MCP is that AI gets involved. Notice how most of the data within the communication obeys a simple structure with a lot of natural text. We’ll be covering how this can be integrated into an LLM later. For now, let’s explore some of the other fields we saw in the inspector.

Building a More Complete MCP Server

Full code can be found here

In this example, we’ll be using all of the major functionality of MCP within a single server. This will employ the three major types of capabilities:

Resources: File-like data that can be read by clients.

Tools: Functions that can be called by the LLM.

Prompts: Pre-written templates that help users accomplish specific tasks

Exactly how LLMs interact with these primitives is ultimately up to whoever is developing the client.

Resources are designed to be application-controlled, meaning that the client application can decide how and when they should be used. Different MCP clients may handle resources differently. For example:

- Claude Desktop currently requires users to explicitly select resources before they can be used

- Other clients might automatically select resources based on heuristics

- Some implementations may even allow the AI model itself to determine which resources to use

source

As MCP is in its early stages, these core primitives will likely evolve as the standard does. Generally, though, Resources are data you can give a model (like with RAG), Tools are functions an AI model can execute (like with an agent), and Prompts are pre-formatted templates that are designed to work well with the resources and tools provided in an MCP server.

Let’s build a simple server that enables all three of the core primitives of MCP.

"""A Simple Server with Tools, Resources, and Prompts
"""
from mcp.server.fastmcp import FastMCP
import mcp.types as types
from mcp.types import AnyUrl

mcp = FastMCP("server")

# ==================== Tool ===================
@mcp.tool()
def add(a: float, b: float) -> float:
    """adds two numbers together.

    Args:
        a: a number
        b: a number
    """
    return a+b

# ==================== Resources ===================
@mcp.resource("file://pi.txt")
async def read_resource() -> str:
    with open('pi.txt', 'r', encoding='utf-8') as file:
        pi_digits = file.read()
    return pi_digits

# ==================== Prompts ===================
@mcp.prompt()
def add_to_last(number:float) -> str:
    """Creates a prompt that will encourage the agent to use proper tooling
    to add a number to the final provided digit of Pi."""
    return f"read the pi resource and add {number} to the final digit using the add tool."

And we can build a client that will list out all of the available tools, resources, and prompts.

from mcp import ClientSession, StdioServerParameters, types
from mcp.client.stdio import stdio_client

# Create server parameters for stdio connection
server_params = StdioServerParameters(
    command="mcp",  # Executable
    args=["run", "server.py"],
)

async def run():
    async with stdio_client(server_params) as (read, write):
        async with ClientSession(
            read, write
        ) as session:
            # Initialize the connection
            await session.initialize()

            # List available tools
            response = await session.list_tools()
            print('\n\ntools:')
            print(response.tools)

            # List available resources
            response = await session.list_resources()
            print('\n\nresources:')
            print(response.resources)

            # List available prompts
            response = await session.list_prompts()
            print('\n\nprompts:')
            print(response.prompts)

if __name__ == "__main__":
    import asyncio

    asyncio.run(run())

Running uv run client.py results in the following output:

tools:

[Tool(name='add', description='adds two numbers together.\n\nArgs:\n a: a number\n b: a number\n', inputSchema={'properties': {'a': {'title': 'A', 'type': 'number'}, 'b': {'title': 'B', 'type': 'number'}}, 'required': ['a', 'b'], 'title': 'addArguments', 'type': 'object'})]

resources:

[Resource(uri=AnyUrl('file://pi.txt/'), name='file://pi.txt/', description=None, mimeType='text/plain', size=None, annotations=None)]

prompts:

[Prompt(name='add_to_last', description='Creates a prompt that will encourage the agent to use proper tooling\nto add a number to the final provided digit of Pi.', arguments=[PromptArgument(name='number', description=None, required=True)])]

Without hooking up an AI model just yet, we can use the resources, tools, and prompts in our server manually in a way that’s similar to how an AI agent might choose to work with MCP.

"""Interfacing with our server manually, similarly to how an AI Agent might
"""
from mcp import ClientSession, StdioServerParameters, types
from mcp.client.stdio import stdio_client

# Create server parameters for stdio connection
server_params = StdioServerParameters(
    command="mcp",  # Executable
    args=["run", "server.py"],
)

async def run():
    async with stdio_client(server_params) as (read, write):
        async with ClientSession(
            read, write
        ) as session:
            print('simulating asking the client to add 1 to the last digit in pi.txt...\n')

            print('\n======== Listing Resources =========')
            # Initialize the connection
            await session.initialize()

            # List all available things
            response = await session.list_tools()
            print('\ntools:')
            print(response.tools)

            response = await session.list_resources()
            print('\nresources:')
            print(response.resources)

            response = await session.list_prompts()
            print('\nprompts:')
            print(response.prompts)

            number_to_add = 1

            print('\n======== Getting Prompt =========')
            # Getting the add_to_last prompt (prompt arguments are passed as strings)
            prompt = await session.get_prompt(
                "add_to_last", arguments={"number": str(number_to_add)}
            )
            print(prompt.messages[0].content.text)

            print('\n======== Getting Resource =========')
            # Reading the pi.txt file; the text lives in the result's contents list
            resource = await session.read_resource("file://pi.txt/")
            pi_digits = resource.contents[0].text
            print(pi_digits)

            print('\n======== Adding to last digit =========')
            # adding digits together; the add tool's schema expects numbers
            last_digit = int(pi_digits[-1])
            result = await session.call_tool("add", arguments={"a": last_digit, "b": number_to_add})
            print(result)

if __name__ == "__main__":
    import asyncio

    asyncio.run(run())

I put this in a client called client2.py, which we can run with uv run client2.py:

simulating asking the client to add 1 to the last digit in pi.txt...

======== Listing Resources =========

tools:

[Tool(name='add', description='adds two numbers together.\n\nArgs:\n a: a number\n b: a number\n', inputSchema={'properties': {'a': {'title': 'A', 'type': 'number'}, 'b': {'title': 'B', 'type': 'number'}}, 'required': ['a', 'b'], 'title': 'addArguments', 'type': 'object'})]

resources:

[Resource(uri=AnyUrl('file://pi.txt/'), name='file://pi.txt/', description=None, mimeType='text/plain', size=None, annotations=None)]

prompts:

[Prompt(name='add_to_last', description='Creates a prompt that will encourage the agent to use proper tooling\nto add a number to the final provided digit of Pi.', arguments=[PromptArgument(name='number', description=None, required=True)])]

======== Getting Prompt =========

read the pi resource and add 1 to the final digit using the add tool.

======== Getting Resource =========

3.141519

======== Adding to last digit =========

meta=None content=[TextContent(type='text', text='10.0', annotations=None)] isError=False

Here, we fetched the prompt “read the pi resource and add 1 to the final digit using the add tool”, which we defined in our MCP server, and then looked up the content of pi.txt. Then, we used the add tool provided by our MCP server to add 1 to the last digit in that file.

You might notice, if you’re familiar with LLM agents, this is starting to look remarkably similar to common agent design approaches. The whole idea of MCP is that, instead of us programmatically talking with the MCP server, we can allow an AI model to do it on our behalf based on some user's query. Let’s go ahead and do that now.

Building an AI-Powered Client

The actual agentic design within an AI client can be complex, but ultimately obeys the same design principles as agents outside of an MCP context.

Ultimately, client developers are at liberty to specify how resources, prompts, and tools are provided to an agent, and how that agent feeds those into an LLM in turn. I’ll likely be playing around with MCP in depth in future articles, so for now we can keep it abstract.

I particularly like this example by sidharthrajaram. There’s some complicated stuff going on which we’ll discuss, but from a tool use perspective, it’s fairly elegant.

async def process_query(self, query: str) -> str:
    """Process a query using Claude and available tools"""
    messages = [
        {
            "role": "user",
            "content": query
        }
    ]

    response = await self.session.list_tools()
    available_tools = [{
        "name": tool.name,
        "description": tool.description,
        "input_schema": tool.inputSchema
    } for tool in response.tools]

    # Initial Claude API call
    response = self.anthropic.messages.create(
        model="claude-3-5-sonnet-20241022",
        max_tokens=1000,
        messages=messages,
        tools=available_tools
    )

    # Process response and handle tool calls
    tool_results = []
    final_text = []

    for content in response.content:
        if content.type == 'text':
            final_text.append(content.text)
        elif content.type == 'tool_use':
            tool_name = content.name
            tool_args = content.input

            # Execute tool call
            result = await self.session.call_tool(tool_name, tool_args)
            tool_results.append({"call": tool_name, "result": result})
            final_text.append(f"[Calling tool {tool_name} with args {tool_args}]")

            # Continue conversation with tool results
            if hasattr(content, 'text') and content.text:
                messages.append({
                    "role": "assistant",
                    "content": content.text
                })
            messages.append({
                "role": "user",
                "content": result.content
            })

            # Get next response from Claude
            response = self.anthropic.messages.create(
                model="claude-3-5-sonnet-20241022",
                max_tokens=1000,
                messages=messages,
            )

            final_text.append(response.content[0].text)

    return "\n".join(final_text)

Naturally, because Anthropic created MCP, the way you can communicate tool definitions to their Claude family of models is similar to what MCP specifies. In this example, we ask the client to request a list of tools from the server, and then we re-format them in a way that’s compatible with the anthropic.messages.create function.

response = await self.session.list_tools()
available_tools = [{
    "name": tool.name,
    "description": tool.description,
    "input_schema": tool.inputSchema
} for tool in response.tools]

# Initial Claude API call
response = self.anthropic.messages.create(
    model="claude-3-5-sonnet-20241022",
    max_tokens=1000,
    messages=messages,
    tools=available_tools
)

Then, if Claude chooses to say stuff, we add that to the output we deliver to the user:

for content in response.content:
    if content.type == 'text':
        final_text.append(content.text)

But if Claude decides some tool use is required, then we execute the tool, add the results to the conversation, and then prompt the model to create a new output based on the tool results.

    elif content.type == 'tool_use':
        tool_name = content.name
        tool_args = content.input

        # Execute tool call
        result = await self.session.call_tool(tool_name, tool_args)
        tool_results.append({"call": tool_name, "result": result})
        final_text.append(f"[Calling tool {tool_name} with args {tool_args}]")

        # Continue conversation with tool results
        if hasattr(content, 'text') and content.text:
            messages.append({
                "role": "assistant",
                "content": content.text
            })
        messages.append({
            "role": "user",
            "content": result.content
        })

        # Get next response from Claude
        response = self.anthropic.messages.create(
            model="claude-3-5-sonnet-20241022",
            max_tokens=1000,
            messages=messages,
        )

        final_text.append(response.content[0].text)

You can probably imagine, if you’re building a client that works with multiple types of tools, AI models, resources, and prompts, that there might be a lot of leeway in terms of how an AI model or agent interfaces with the capabilities that an MCP server provides.
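One detail the example glosses over is how tool output is tied back to the request that triggered it. In Anthropic's Messages API, a tool request arrives as a `tool_use` content block with an `id`, and the result is normally returned in a `tool_result` block that references that id. The helper below is a sketch of building those two follow-up messages; `build_tool_followup` is a hypothetical name, the id is made up, and plain dicts stand in for the SDK's response objects.

```python
def build_tool_followup(tool_use: dict, result_text: str) -> list[dict]:
    """Return the assistant/user message pair that reports a tool result.

    `tool_use` is assumed to be a dict shaped like a `tool_use` content
    block: {"type": "tool_use", "id": ..., "name": ..., "input": ...}.
    """
    assistant_message = {"role": "assistant", "content": [tool_use]}
    user_message = {
        "role": "user",
        "content": [
            {
                "type": "tool_result",
                "tool_use_id": tool_use["id"],  # links result to request
                "content": result_text,
            }
        ],
    }
    return [assistant_message, user_message]

# Example with made-up values, mirroring the `add` tool from earlier.
tool_use_block = {
    "type": "tool_use",
    "id": "toolu_example_123",
    "name": "add",
    "input": {"a": 9, "b": 1},
}
messages = [{"role": "user", "content": "Add 1 to 9."}]
messages += build_tool_followup(tool_use_block, "10.0")
print(messages[-1]["content"][0]["tool_use_id"])
```

Keeping the id linkage explicit matters once a single response contains several tool calls, since the model needs to know which result belongs to which request.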

Let’s talk about some of the more advanced paradigms which MCP affords.

Advanced MCP Functionality

If we open up the MCP Inspector for one of our servers with uv run mcp dev server.py, we’ll find a few things we haven’t yet discussed.

Within the Resources tab, there is a Resource Templates field. Imagine if you had hundreds of files within a folder:

file1.txt
file2.txt
file3.txt
...
file237.txt

The way we defined resources previously, you would have to specify an endpoint for every single file. With resource templates, you can define a template that allows a language model to understand the general structure of these resources and query them dynamically.
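As a rough picture of what a template buys you, the sketch below matches concrete URIs against a single pattern with a `{name}` placeholder and pulls out the parameter. This only illustrates the concept with the standard library; it is not how the MCP SDK implements templates, and the `files://{name}.txt` pattern and helper names are assumptions on my part.

```python
import re

def compile_template(template: str) -> re.Pattern:
    """Turn a URI template like 'files://{name}.txt' into a regex."""
    pattern = ""
    # Split on {placeholders}, keeping them so they can become named groups.
    for part in re.split(r"(\{\w+\})", template):
        if part.startswith("{") and part.endswith("}"):
            pattern += f"(?P<{part[1:-1]}>[^/]+)"
        else:
            pattern += re.escape(part)
    return re.compile(f"^{pattern}$")

def match_uri(template: str, uri: str):
    """Return the extracted parameters, or None if the URI doesn't match."""
    match = compile_template(template).match(uri)
    return match.groupdict() if match else None

TEMPLATE = "files://{name}.txt"  # one template instead of 237 endpoints
print(match_uri(TEMPLATE, "files://file237.txt"))
print(match_uri(TEMPLATE, "files://notes.md"))
```

The extracted parameters would then be handed to whatever function actually loads the file, so one definition covers every file in the folder.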

We can ping the server, which is a simple way to make sure the server is still up and running.

This can be done with the session.send_ping function (source). There’s also “sampling” which is interesting.

The idea of sampling is to allow the server to request certain output from the client, chiefly in the form of querying the LLM. The docs describe the following functionality:

Sampling is a powerful MCP feature that allows servers to request LLM completions through the client, enabling sophisticated agentic behaviors while maintaining security and privacy.

…

The sampling flow follows these steps:

1) Server sends a sampling/createMessage request to the client
2) Client reviews the request and can modify it
3) Client samples from an LLM
4) Client reviews the completion
5) Client returns the result to the server

This human-in-the-loop design ensures users maintain control over what the LLM sees and generates. — Source
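The five steps above can be sketched in plain Python, with stand-ins for both the LLM and the human review steps. The function names and the canned completion are hypothetical, and the request and reply are simplified versions of what the spec describes (a real reply also reports details such as which model was used).

```python
# A sketch of the sampling flow; no real model or MCP session is involved.

def make_sampling_request(prompt: str, max_tokens: int = 100) -> dict:
    """Step 1: the server builds a sampling/createMessage request."""
    return {
        "method": "sampling/createMessage",
        "params": {
            "messages": [
                {"role": "user", "content": {"type": "text", "text": prompt}}
            ],
            "maxTokens": max_tokens,
        },
    }

def review_request(request: dict) -> dict:
    """Step 2: the client may edit the request (here: cap maxTokens)."""
    request["params"]["maxTokens"] = min(request["params"]["maxTokens"], 50)
    return request

def fake_llm(request: dict) -> str:
    """Step 3: stand-in for the client sampling from a real model."""
    text = request["params"]["messages"][0]["content"]["text"]
    return f"(completion for: {text})"

def review_completion(completion: str) -> str:
    """Step 4: the client may approve, edit, or reject the completion."""
    return completion

def handle_sampling(request: dict) -> dict:
    """Steps 2-5 on the client side, ending with the reply to the server."""
    approved = review_request(request)
    completion = review_completion(fake_llm(approved))
    return {"role": "assistant", "content": {"type": "text", "text": completion}}

reply = handle_sampling(make_sampling_request("Summarize pi.txt", max_tokens=500))
print(reply["content"]["text"])
```

Notice that the server never touches the model directly. Every request and every completion passes through code the client controls.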

There’s also the idea of “roots”.

The docs describe roots as:

A concept in MCP that define the boundaries where servers can operate. They provide a way for clients to inform servers about relevant resources and their locations.

So, you can tell the MCP server what files you want it to look at on your computer.

Recall that a fundamental part of MCP’s design is that the client spawns the server. Roots allow the client to tell the server which files it should access and prioritize. You might be able to imagine a client that specifies some root for a server, and then the server looks at the files in that root and constructs a resource template that it provides to the model. The model could then interface with that data by requesting certain resources individually.
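As a rough sketch of how a server might honor roots, the snippet below checks whether a path falls inside any root the client declared. The `ROOTS` list, the example paths, and the `is_within_roots` helper are hypothetical. The `uri` and `name` fields mirror how roots are described in the MCP docs, but this is plain Python, not SDK code.

```python
import posixpath
from pathlib import PurePosixPath
from urllib.parse import unquote, urlparse

# Hypothetical roots a client might declare to a server.
ROOTS = [
    {"uri": "file:///home/user/projects/notes", "name": "Notes"},
]


def root_path(root: dict) -> PurePosixPath:
    """Extract the filesystem path from a file:// root URI."""
    return PurePosixPath(unquote(urlparse(root["uri"]).path))


def is_within_roots(path: str, roots: list[dict]) -> bool:
    """Return True if `path` sits inside at least one declared root.

    The check is lexical: '..' segments are collapsed first, but symlinks
    are not resolved, which a real server would also need to handle.
    """
    candidate = PurePosixPath(posixpath.normpath(path))
    return any(candidate.is_relative_to(root_path(root)) for root in roots)


if __name__ == "__main__":
    print(is_within_roots("/home/user/projects/notes/todo.txt", ROOTS))
    print(is_within_roots("/etc/passwd", ROOTS))
```

A server that respects roots would run a check like this before listing or reading any file.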

There’s one more key idea of MCP that we should discuss before we wrap up with final thoughts.

Transports

We’ve been in the weeds of MCP for a while now, so let’s zoom out and remind ourselves what’s going on. There’s an MCP client that probably has some AI agent built into it. The MCP client talks to an MCP server. The MCP server’s job is to expose tools and resources to the MCP client.

The actual communication protocol between the MCP client and server is called the transport. It is the foundation for how the MCP client and server send messages back and forth.

This isn’t the type of thing you need to worry about if you’re building simple servers that can plug into Claude Desktop, but it has massive implications in terms of how this technology can be integrated into more sophisticated systems.

Standard Input/Output (stdio) is the default transport used by most servers in MCP. In the Python SDK, this uses sys.stdin and sys.stdout via the AnyIO library. Depending on your operating system this might function slightly differently, but the essential idea is that a pipeline is set up between your MCP server and client, allowing the two processes to send data back and forth. This approach very much assumes that both the client and server are operating on the same machine.
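Here is a minimal sketch of that pipeline using only the standard library. The client spawns a child process and the two exchange one JSON message per line over the child’s stdin and stdout. The `TOY_SERVER` script and the `ping_over_stdio` function are hypothetical. The toy server answers every request with an empty result, so it is not a real MCP server, but the spawn-then-pipe pattern is the same.

```python
import json
import subprocess
import sys

# Toy "server": reads one JSON-RPC message per line from stdin and answers
# every request with an empty result. Not a real MCP server.
TOY_SERVER = r"""
import json, sys
for line in sys.stdin:
    request = json.loads(line)
    response = {"jsonrpc": "2.0", "id": request["id"], "result": {}}
    sys.stdout.write(json.dumps(response) + "\n")
    sys.stdout.flush()
"""


def ping_over_stdio() -> dict:
    """Spawn the toy server, send it a ping, and return its reply."""
    proc = subprocess.Popen(
        [sys.executable, "-c", TOY_SERVER],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
    )
    try:
        ping = {"jsonrpc": "2.0", "id": 1, "method": "ping"}
        # One message per line; flush so the child sees it immediately.
        proc.stdin.write(json.dumps(ping) + "\n")
        proc.stdin.flush()
        return json.loads(proc.stdout.readline())
    finally:
        # Closing stdin ends the child's read loop, letting it exit cleanly.
        proc.stdin.close()
        proc.wait(timeout=5)
        proc.stdout.close()


if __name__ == "__main__":
    print(ping_over_stdio())
```

Because the client owns the child process, the server’s lifetime is tied to the client’s, which is why this transport assumes both live on the same machine.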

Server-Sent Events (SSE) is another transport, and it runs over HTTP. The server streams messages to the client as server-sent events, and the client sends messages to the server with HTTP POST requests. HTTP is an Internet communication standard that is designed to allow isolated clients and servers to communicate over the Internet. You’re reading this blog post right now through an HTTP connection.
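To give a feel for what travels over this transport, the sketch below parses a small server-sent event stream. The stream text, the `/messages?session_id=abc` path, and the `parse_sse` helper are made up for illustration. The parser is deliberately simplified and handles only `event:` and `data:` fields. As I understand the SSE transport, the server first announces the URL the client should POST to, then delivers JSON-RPC messages as events.

```python
import json


def parse_sse(stream: str) -> list[dict]:
    """Split an SSE text stream into events; a blank line ends each event."""
    events = []
    event_type, data_lines = "message", []
    for line in stream.splitlines():
        if line == "":
            # Blank line: dispatch the event collected so far, if any.
            if data_lines:
                events.append({"event": event_type, "data": "\n".join(data_lines)})
            event_type, data_lines = "message", []
        elif line.startswith("event:"):
            event_type = line[len("event:"):].strip()
        elif line.startswith("data:"):
            data_lines.append(line[len("data:"):].strip())
    return events


# Hypothetical stream: an endpoint announcement, then one JSON-RPC reply.
EXAMPLE_STREAM = (
    "event: endpoint\n"
    "data: /messages?session_id=abc\n"
    "\n"
    "event: message\n"
    'data: {"jsonrpc": "2.0", "id": 1, "result": {}}\n'
    "\n"
)

if __name__ == "__main__":
    for event in parse_sse(EXAMPLE_STREAM):
        print(event["event"], "->", event["data"])
```

The `data` of a `message` event is just JSON text, so the client can pass it to `json.loads` and handle it exactly as it would a line read from stdio.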

Running MCP with HTTP has some compelling benefits. I discussed an MCP example by sidharthrajaram previously. That example uses HTTP to allow the MCP client and server to be started independently and connect at will. Recall that the normal workflow is that the client starts the server.

If you were building some application that you wanted to be “discovered” by MCP clients, you can use the HTTP transport to expose your MCP server on a port on your computer, which can then be connected to by an MCP client at will.

You could also, in theory, put your MCP server online, allowing MCP clients to connect to it over the Internet.

Imagine if, instead of needing to install an MCP server on your computer, you could use your client to connect to an MCP server that’s hosted online. Similarly to how you use web browsers to connect to websites, MCP could allow AI agents to connect to MCP servers, understand them, and use them.

I think this ties nicely into some of my thoughts about MCP.

Closing Thoughts

MCP is only a few months old, and people are already imagining it as the fundamental center of AI application development moving forward. It seems like that’s something Anthropic might be interested in.

MCP is an open protocol that standardizes how applications provide context to LLMs. Think of MCP like a USB-C port for AI applications. Just as USB-C provides a standardized way to connect your devices to various peripherals and accessories, MCP provides a standardized way to connect AI models to different data sources and tools. — Source

The issue is, it’s kind of hard to understand what MCP is, and what it’s really designed to do. For instance, these are a few things that I think MCP defines, just off the top of my head:

MCP defines that LLMs conceptualize interfacing with external systems as “tools”

MCP defines a way to represent those tools

MCP defines that there are also resources and prompts and that those are the best conceptualization for how these types of information should be organized

MCP defines that LLMs should be implemented in MCP clients, and those should interact with MCP servers which provide resources

MCP sets a communication standard between those clients and servers on local devices

MCP sets a communication standard between those clients and servers online (maybe)

MCP assumes that prompting can be split, without firm definitions, between client and server maintainers

The fundamental bedrock from which MCP makes these definitions is not bedrock at all, but rather a shifting sand of AI R&D. While tool use seems like a common approach, some research suggests the way we’re conceptualizing tools may be wrong in some fundamental ways. It’s possible the standard way people interface with LLMs might fundamentally change in the near future, leaving a question of whether MCP might still be relevant.

More immediately, though, there’s a disconnect between what MCP can do, what it’s designed to do, and what people say it’s for. While researching this article I heard a lot of rhetoric like this:

“MCP is the USB of AI”

“MCP is the HTTP of AI”

“MCP standardizes the way AI agents are defined”

“With MCP, AI will have its own way of talking to servers online”

“MCP is the future of AI connectivity”

“MCP is gaining widespread adoption in its ability to connect systems to AI”

Frankly, this caused some frustration on my end. I’m pumping these articles out, so eating up days of research just to be able to answer the high-level question of “what and why is MCP” was not ideal. I imagine that might also not be ideal for developers who are tasked with implementing this type of technology on time and under budget, and I don’t think the problem is helped by the flexibility and looseness of MCP in general.

Think of MCP like a USB-C port for AI applications. — Source

Like, what does that even mean?

When people say MCP has seen “widespread adoption”, they’re usually referring to repos like this or like this which, a staggering percentage of the time, feature light modifications derived from examples in Anthropic’s MCP repo which are designed to be loaded up in the Anthropic MCP client, Claude Desktop.

This is super cool and interesting, but even an infinite number of what essentially amount to addons for Claude Desktop will not prove that MCP is the right standard for connecting AI to an internet of servers. Despite that, it’s common rhetoric that that’s precisely what’s happening right now. Because MCP supports multiple transport methods, it’s certainly possible that MCP could support this type of growth, but saying that it already has is a common misconception.

My honest take is that Anthropic designed MCP so that people would make addons for Claude Desktop, and then open-sourced it to make it more widely adopted. I think that’s a smart business move, and I’m happy open source software is in line with their financial goals. The reality is that, based on the early support and documentation thus far, Anthropic’s chief interest seems to be in improving MCP so that Claude Desktop gains more adoption.

The thing is, though, I don’t think Anthropic expected to step right next to a goldmine.

MCP seems to have sparked, in the imagination of many, the idea that AI systems will be able to natively connect to websites and online services, enabling a new era of AI interconnectivity that will open new markets and new entrepreneurial opportunities. If your nontechnical boss asks you what “MCP” is, that’s why.

Clearly Anthropic was interested in this being a possibility: HTTP is a built-in transport layer within MCP. In practice, though, there’s very little documentation or development around that workflow. The question is whether Anthropic will pivot MCP to seize this opportunity or stay focused on local connectivity.

Join Intuitively and Exhaustively Explained

This article was originally posted on and is made available due to the support of the subscribers of Intuitively and Exhaustively Explained.

At IAEE you can find:

Long form content, like the article you just read

Conceptual breakdowns of some of the most cutting-edge AI topics

By-Hand walkthroughs of critical mathematical operations in AI

Practical tutorials and explainers
