The Future is Agentic

An opinion piece by a data scientist

Product engineers have been attempting to wrangle AI into mainstream applications for a while now. However, despite the remarkable power of large language models, many companies have been largely unsuccessful at revolutionizing their products using LLMs.

The goal for many is to make an AI system that can function as a true virtual assistant, à la Tony Stark’s JARVIS, but for your favorite app. Despite trillions of dollars of investment across various organizations, however, true integration success stories are few and far between.

It’s quickly becoming apparent that, while LLMs are exciting, they’re not a silver bullet. AI needs clever designers to wrangle it into a focused and powerful offering so it can actually be useful to consumers. Agentic systems seem to be the shining north star for building successful LLM-powered applications.

Naive AI

Before we get into agentic systems, let’s look at a simpler example of LLM usage.

The most fundamental way to use a language model is via a process called “autoregressive generation”. Basically, you give a language model some input, and the language model predicts the output one word (strictly speaking, one token) at a time, feeding each prediction back in as input, until it decides to stop.
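To make the loop concrete, here is a toy sketch of autoregressive generation. The “model” is a hypothetical lookup table (`TOY_BIGRAMS`) that predicts the next word from the last word only; a real LLM scores every token in its vocabulary using the whole context, but the loop around it has the same shape: predict, append, repeat until a stop signal. Notice that nothing in the loop can go back and revise an earlier word.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for a language model: maps the last word to the next word.
TOY_BIGRAMS: Dict[str, str] = {
    "the": "future",
    "future": "is",
    "is": "agentic",
    "agentic": "<stop>",
}

def toy_next_word(context: List[str]) -> str:
    """Predict the next word by looking only at the last word of the context."""
    return TOY_BIGRAMS.get(context[-1], "<stop>")

def generate(prompt: str, next_word: Callable[[List[str]], str], max_words: int = 50) -> str:
    """Autoregressive generation: each prediction is appended and becomes part of the input."""
    context = prompt.split()
    for _ in range(max_words):
        word = next_word(context)  # predict one word from everything generated so far
        if word == "<stop>":       # the model "decides" to stop
            break
        context.append(word)       # no backspace: words are only ever appended
    return " ".join(context)

print(generate("the", toy_next_word))  # → the future is agentic
```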

It can be surprising to realize that the world is being shaken up by glorified next-word predictors. Some people, like OpenAI co-founder Ilya Sutskever, think next-word prediction is enough to achieve generally intelligent systems. I disagree.

Regardless, it’s pretty clear that language models simply aren’t enough by themselves right now. If they were, we would be using them consistently for much more than just chatbots.

The Problems With Naive AI

When I sat down to write this article, I roughly followed these steps:

I came up with the core idea, which was largely inspired by some of the work I’ve been doing professionally.

I started by making a title image and workshopped the title and intro. That set the tone and core idea of the article.

I wrote a bit of it, wasn’t happy, and re-worked the intro to be more focused.

As the structure started to come together, I used a few external references to refine and reinforce some of my ideas.

I went through the whole article numerous times to refine the flow and trim out fluff.

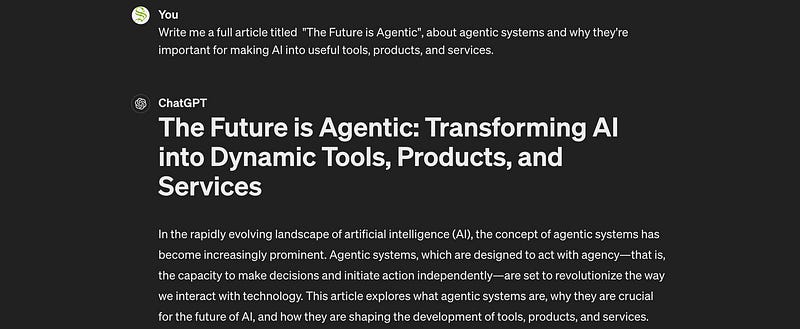

If you asked an AI system like ChatGPT to write this article, it would attempt to construct the entire article, word by word, in one shot.

Most language models will actually do a fair job with the introduction, which makes sense: LLMs have seen millions of articles during training and understand enough about the English language to introduce a topic.

However, the output almost always devolves into a superficial and boring list of information, regardless of how fancy a prompt you give it. That’s because outputting an article word by word with no backspace or revision is not the best way to write an article.

Some tasks are totally possible using only next-word prediction. You can ask ChatGPT questions like “summarize this email”, or “can you please answer this simple question”, and you might get useful answers. However, as it turns out, many of the problems humans actually care about solving require something more complex than next-word prediction.

There’s another problem as well.

Even if you could make an AI system that thinks in a way more complex than next-word prediction, who cares? Imagine you spent a gazillion dollars and made a crazy-intelligent AI system that was smarter than any person in the world. Then imagine asking it about the weather.

Even with all the intelligence in the world, the AI system doesn’t know where you are, it can’t access the weather forecast, and it doesn’t even know today’s date. A language model can learn about nearly everything under the sun, but it can only converse about information it had access to during training. Isolated genius is of limited use.

Thus, at least right now, language models are plagued by two big problems holding them back.

Even though they’re large and sophisticated, their reasoning is excessively simple. Many problems are too complex to be solved by guessing a response one word at a time.

Even if you did make a language model capable of complex thought, it wouldn’t be useful in many applications, because language models can only talk about the data they saw during training, which is always from the past.

Both of these problems are being addressed, simultaneously, with agentic systems.

What is an Agentic System?

Agentic systems are a hot topic right now, and the exact specifics change regularly. At the highest level, however, the idea is to use language models to construct an “Agent”.

Imagine if, instead of asking a language model to give you some output immediately, you asked a language model to do things like this:

“You’ve been given a complex question, think about what to do next.”

“You have access to a few tools, think about which one you can use to best assist the user.”

“You just output some information. Was it correct? Would you like to revisit that idea or move on?”

Essentially, Agents create a framework that allows a language model to reason about its previous output and decide to use tools to seek external sources of information.
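In practice, framing instructions like the ones above are usually assembled into a single text prompt that is rebuilt on every step. The sketch below shows one way to do that, loosely in the style of ReAct prompting. The function name `build_agent_prompt`, the instruction wording, and the `Tool[input]` action syntax (modeled on the agent trace in the example that follows) are illustrative assumptions, not the author’s actual prompt.

```python
from typing import Dict, List

def build_agent_prompt(question: str, tools: Dict[str, str], history: List[str]) -> str:
    """Assemble a ReAct-style prompt from instructions, tool descriptions, and prior steps.

    Hypothetical helper: the wording and the Tool[input] syntax are assumptions.
    """
    lines = [
        "Answer the question by alternating Thought, Action, and Observation steps.",
        "You can take the following actions:",
    ]
    # Describe each tool so the model can decide which one helps.
    lines += [f"- {name}[input]: {description}" for name, description in tools.items()]
    lines.append("- Finish[answer]: give the final answer and stop.")
    lines.append("Before each new Thought, check whether your previous output was correct.")
    lines.append("")
    lines.append(f"Question: {question}")
    # Replaying earlier Thoughts, Actions, and Observations lets the model reason about them.
    lines += history
    lines.append("Thought:")  # the model continues generating from here
    return "\n".join(lines)

prompt = build_agent_prompt(
    "What is the distance between the most northern and most southern towns in the world?",
    {"BSearch": "search Bing and return a summary of the results"},
    history=[],
)
print(prompt)
```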

For example, in a previous article I wrote on the subject of agents, I asked the agent to find the distance between the northernmost and southernmost towns in the world. This was the response:

0 - Question: What is the distance between the most northern town in the world, and the most southern town in the world?

1 - Thought: The most northern town in the world is Longyearbyen, Svalbard, Norway; the most southern town is Puerto Toro, Chile. I need to search for the distance between Longyearbyen and Puerto Toro.

2 - Action: BSearch[distance between Longyearbyen and Puerto Toro]

3 - Observation: The distance between Longyearbyen, Svalbard, Norway, and Puerto Toro, Chile is not directly provided in the search result. However, with Longyearbyen being situated at 78°13′ N and 818 miles from the North Pole, and Puerto Toro located at 55°05′00″S on the eastern coast of Navarino Island, one would need to utilize a distance calculator tool or perform a more targeted search to determine the direct or travel distance between these two locations.

4 - Thought: I need to search for a more direct answer or use a geographic distance calculator to determine the distance between Longyearbyen and Puerto Toro. Since I don't have access to such a tool, I can only perform a search. I will adjust the search terms to hopefully yield a result that directly states the distance.

5 - Action: BSearch[distance from Longyearbyen, Norway to Puerto Toro, Chile]

6 - Observation: The distance between Longyearbyen, Norway, and Puerto Williams, Chile (close to Puerto Toro), is approximately 15784.4 kilometers (km). Note that this figure may represent a direct air travel distance and actual travel distance could vary depending on the route taken.

7 - Thought: The distance provided is for Longyearbyen to Puerto Williams, which is near Puerto Toro. The actual distance would be very slightly more than this, but for most intents and purposes, this distance would suffice as a good estimate for the distance between the most northern and the most southern towns in the world. The answer is approximately 15,784.4 kilometers.

8 - Action: Finish[Approximately 15,784.4 kilometers]

The “Question” was asked by me. The “Thoughts”, “Actions”, and “Observations” were generated automatically by an LLM, without human input. In this case, “BSearch” is a tool I made that allows the agent to search Bing. An agent is essentially code designed to encourage this type of behavior.
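The Thought, Action, Observation cycle in the trace above can be sketched as a small loop. This is a minimal illustration, not the author’s implementation: `scripted_model` is a hypothetical stand-in for a real LLM call that replays canned steps, `fake_bsearch` stands in for the Bing-backed BSearch tool, and the regex that parses `Tool[input]` actions is an assumption based on the format of the trace.

```python
import re
from typing import Callable, Dict

# Matches an action line such as "Action: BSearch[some query]" or "Action: Finish[answer]".
ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]\s*$")

def run_agent(
    question: str,
    model: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    """Run a Thought -> Action -> Observation loop until the model calls Finish."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = model(transcript)  # expected: "Thought: ...\nAction: Tool[input]"
        transcript += step + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            # Tell the model its output was malformed so it can try again.
            transcript += "Observation: No valid action found.\n"
            continue
        name, argument = match.group(1), match.group(2)
        if name == "Finish":
            return argument  # the agent decided it has an answer
        tool = tools.get(name)
        observation = tool(argument) if tool else f"Unknown tool: {name}"
        # Feeding the observation back lets the model reason about it on the next step.
        transcript += f"Observation: {observation}\n"
    return "No answer within the step limit."

# Hypothetical stand-ins so the sketch runs without an LLM or a search API.
def scripted_model(transcript: str) -> str:
    if "Observation:" not in transcript:
        return ("Thought: I need to search for the distance.\n"
                "Action: BSearch[distance between Longyearbyen and Puerto Toro]")
    return ("Thought: The search result answers the question.\n"
            "Action: Finish[Approximately 15,784.4 kilometers]")

def fake_bsearch(query: str) -> str:
    return f"(pretend search results for: {query})"

answer = run_agent(
    "What is the distance between the most northern and most southern towns in the world?",
    scripted_model,
    {"BSearch": fake_bsearch},
)
print(answer)  # → Approximately 15,784.4 kilometers
```

The whole transcript is passed back to the model on every step, which is what lets it revisit its previous output; the `max_steps` limit keeps a confused agent from looping forever.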

If you read through the example above you might observe two things:

The agent was remarkably capable of adapting to missing information and worked around challenges organically.

The thought, action, and observation cycle of the agent was very simplistic and repetitive.

Agents are still rigid and simple relative to how a human might think through a problem. Even in this simple form, however, agentic systems are proving massively impactful in allowing language models to be useful in more complex applications. As agents advance, LLMs will likely be integrated into more of the tools we use in our daily lives.

Conclusion

Language models are an incredibly powerful tool, but they have some glaring flaws that make applying them to real-world applications surprisingly difficult. Agents, while new and still relatively simplistic, have emerged as a powerful design paradigm that can overcome some of the problems of naive language model usage.

If you’re interested in Agents, and want a more technical understanding, feel free to check out my technical deep dive on the subject:

LLM Agents — Intuitively and Exhaustively Explained

That article focuses on “Agents”, a general concept that allows language models to reason and interact with the world. It first discusses what agents are and why they’re important, then looks at a few forms of agents to build an intuitive understanding of how they work, and finally explores agents in a practical context by implementing two of them: one using LangChain and one from scratch in Python.